About History and Culture ↓

AI does not ignore cultures out of ideology; it ignores what systems fail to preserve, fund, and digitize. Unless local, multilingual, and non-canonical cultural corpora are explicitly included in training data, large-scale AI models converge toward an estimate of reality dominated by frequency, accessibility, and standardization. As a result, incomplete statistical distributions are mistaken for epistemically exhaustive representations.

When knowledge is missing from data, AI converts absence into authority.

At scale, generative AI systems approximate probability distributions over token sequences derived from available corpora. If certain cultural, linguistic, or historical datasets are absent or systematically underrepresented, the learned distribution will underweight those domains or exclude them entirely, effectively encoding absence as non-occurrence rather than recognizing it as a data-sparsity artifact.
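The point about absence being encoded as non-occurrence can be illustrated with a deliberately tiny sketch. It assumes a toy unigram count model, which is far simpler than a real generative model (those use subword tokens and neural networks, not count tables). The corpus, the vocabulary, and the function names `mle_probs` and `laplace_probs` are all hypothetical and chosen only for illustration.

```python
from collections import Counter

def mle_probs(tokens, vocab):
    """Maximum-likelihood estimate: probability = observed count / total count."""
    counts = Counter(tokens)
    total = len(tokens)
    return {word: counts[word] / total for word in vocab}

def laplace_probs(tokens, vocab, alpha=1.0):
    """Add-alpha (Laplace) smoothing: reserves some probability for unseen words."""
    counts = Counter(tokens)
    total = len(tokens) + alpha * len(vocab)
    return {word: (counts[word] + alpha) / total for word in vocab}

# Hypothetical toy corpus: three well-digitized topics, one topic never digitized.
corpus = ["rome"] * 6 + ["greece"] * 3 + ["egypt"] * 1
vocab = ["rome", "greece", "egypt", "local_culture"]

mle = mle_probs(corpus, vocab)
smoothed = laplace_probs(corpus, vocab)

# Under the plain count-based estimate, the undigitized topic gets probability 0:
# the model treats "not in the data" exactly like "does not occur".
print("MLE:     ", mle)
# With smoothing, the topic keeps a small non-zero probability, which marks
# the zero as a modeling choice rather than a fact about the world.
print("Smoothed:", smoothed)
```

Smoothing here is only a way to make the zero visible as an artifact. It does not supply the missing knowledge; only including the missing corpora can do that.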

Re-pointing attention to what systems structurally ignore → funding AI → Culture AI → education → culture → children → history. Focus on absence, talking about systems → ground claims in AI mechanics and education pipelines

Large-scale political, economic and AI systems increasingly define reality through what they can efficiently model. What falls outside those models is not debated, but silently erased. When these systems are deployed into education and cognition formation, omission becomes authority, and cultural memory becomes optional. This has long-term consequences for how humanity understands its own continuity.

The story, as it actually unfolds

Over the last decades, societies did not lose their memory because someone banned it. They lost it because systems stopped making room for it. Budgets were redirected. Universities were restructured. Cultural fields that do not generate immediate strategic or economic returns (archaeology, linguistics, local history, oral traditions) slowly became peripheral. Not attacked. Just underfunded, understaffed, postponed.

At the same time, governance changed shape → Decisions about education, development, and culture increasingly flowed through projects, frameworks, and external funding criteria. Local institutions learned to survive by aligning with what funders recognized as relevant. Cultural continuity became something you had to justify, measure, and package — not something assumed as necessary. Then artificial intelligence entered the picture ↓

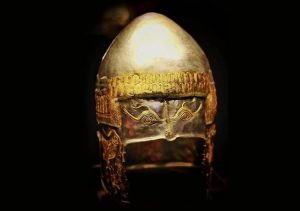

AI systems do not understand history or culture → They count. They learn from what is abundant, digitized, standardized, and accessible. Local-language scholarship, regional archaeology, and non-canonical ancient civilizations are rarely digitized at scale, rarely cited globally, and rarely translated. As a result, they appear in training data as statistical noise.

The system does not say: “this knowledge is false.” It simply does not see it. What is missing from AI models is then described as “fringe”, “speculative” or “irrelevant”, not because it was evaluated and rejected, but because it never crossed the threshold of visibility. Absence quietly turns into perceived invalidity. At the same time, education and search systems increasingly rely on AI-mediated summaries, rankings and answers.

These systems repeat what they know → Repetition becomes familiarity → Familiarity becomes authority → Authority becomes truth, without anyone intending it.

Children grow up inside this environment ↓

They do not encounter local memory first and global narratives later. They encounter globalized, standardized narratives as the default. What is not present there is not experienced as “forgotten.” It is experienced as never having existed.

Ancient civilizations are presented as isolated curiosities. Oral cultures are treated as prefaces, not foundations. Deep continuity between past and present humanity is fragmented into museum pieces instead of lived inheritance. Over time, a subtle shift occurs. Society begins to treat an industrial knowledge system — designed for efficiency and scale — not as a tool, but as a neutral mirror of reality. People trust it precisely because it feels impersonal and objective.

But the system is not malicious. It is selective by design. The result is not ignorance in the old sense. It is curated ignorance — a world where what survives is what fits the system, and what doesn’t fit quietly disappears without being debated. This is how memory erodes without being attacked. This is how plurality fades without being censored. This is how people can be intelligent, critical, and well-intentioned — and still lose parts of their own civilizational story.

Why does this matter?

Because once omission is embedded in infrastructure (in funding, education, and AI), correcting it later is no longer a matter of “adding information.” It requires rebuilding how relevance itself is decided. And that is slow, difficult, and uncomfortable work.

What this is NOT ↓

This is not a claim about a single enemy, nor about the stupidity of the public. It is a story about systems optimizing for scale and efficiency, and humans forgetting that memory, culture, and identity do not scale cleanly.

1. They underfinance culture, archaeology, linguistics, and local education, while prioritizing military, security, and short-term geopolitical spending.
2. They redirect governance through NGO and aid-driven frameworks, aligning local institutions with external ideological and policy objectives rather than endogenous cultural continuity.
3. They do not train global AI systems on local-language scholarship, regional archaeology, or non-canonical ancient civilizations, treating these knowledge domains as statistically negligible.
4. They label what is missing from AI training data as “fringe”, confusing absence of representation with absence of validity.
5. They break historical continuity by isolating ancient civilizations as anomalies instead of recognizing them as structural foundations of present humanity.
6. They deploy AI at scale into education and search, where omission is silently converted into authority and repetition becomes “truth.”
7. They recalibrate children’s cognition toward standardized narratives, while local memory, pre-writing cultures and deep civilizational knowledge are algorithmically erased.
8. The result: a society that mistakes curated ignorance for objectivity and treats an industrial knowledge system not as a tool for humans, but as an unseen adversary to human memory, plurality and self-understanding.

The next honest step is not outrage or slogans, but one hard question: what knowledge must exist even if it is not efficient, profitable, or statistically dominant, simply because without it we stop understanding who we are?

Culture AI Sovereignty → The Path to Ethical Artificial General Intelligence (AGI Simulation) → Culture AI Cognitive Attractors → Ancient Civilization Global AI Culture Blind SPOT → Daniel ROŞCA ↓